Human student.

I have a few friends from school in Canada who are writers and they have strong feelings about AI.

I’m starting to feel that, similar to cryptocurrencies, AI may be a bit overblown. It can do lots of interesting things, but it still needs human supervision to figure out if what it’s produced is good or utter garbage.

The main thing it seems to be capable of doing is generating personalized spam.

Agree.

I have been testing it at work (Copilot) and it has many limitations. It can be good at summarising and automating processes, but I would never trust its output when a degree of judgment and subjectivity is required. The technology is developing quickly, but I don’t see how you can teach AI lateral thinking and creativity.

When you get right down to it, it’s just complex algorithms based on brute force data processing at scale, so it can only output conclusions based on the data it has been trained on. It cannot solve new problems well (no data for it) and it is also unable to make leaps in deductive logic (what we would call “hunches”), so you lose something there as well vs humans.

It is possible to “train” your own AI by talking to one every day (so that it absorbs your responses and trains on them), and that could be an interesting area for development in the future (for an individualised product that consumers might actually buy).

I’m not a fan of the comparison. Imo, generative AI is one of the most interesting tech developments in 20 years. And crypto is a crappy replacement for money which itself is a boring fact of life.

They both just happen to be pumped by the same tech bros, and might be less useful than Wall Street assumed.

I think the blockchain idea has opportunity. Way less clear on the currency part. Seems like it could be bypassed for use in private, internal networks.

This is my take. AI is incredibly useful. It’s been elevated to a godlike technology at times, which is undeserved.

There will always be a place for human writers and human artists and human actuaries. For people such as writers and artists, the floor has been raised significantly. There will not be nearly as much room for mediocre writers and artists to be paid in the future - only to the extent that people choose to pay for something they could produce in 30 seconds, computer startup time inclusive.

AI is great at looking at what’s been done in the past and repeating, or mixing and repeating it. Your AI video of Trump and Putin waltzing is neat, and made of things that have already been made. They were put together in a very neat way.

We used to say that a thousand monkeys on a thousand typewriters would eventually produce the works of Shakespeare. We haven’t significantly moved from that - but they’re better. The monkeys have gotten smart enough to recognize what words Shakespeare liked to use in which order, were injected with amphetamines, and produce that work much faster. (Obviously, they could immediately paste you the actual works; it’s a metaphor.)

Actuaries have not had their floor raised nearly as much. It budged. In the future, it might elevate a little more. Well, more accurately it almost surely will. How much remains to be seen, but I’m not concerned about my job. Would have to be an incredibly specifically-trained AI with flexibility and knowledge that doesn’t exist now and won’t soon. Perhaps my grandchildren should eschew being an actuary and just rent their brainpower to the Matrix and plug in for their job.

One day they will take over the Golden Gate Bridge.

I always get you mixed up with @SredniVashtar. Which is very unfair, because I think one of you is a relatively nice and cuddly animal, and I think the other smells like shit and probably has chlamydia.

We should form a partnership and start a wine label.

Where I feel our types of jobs (e.g. building models of systems) are safe is that even though we spend extensive time and effort and a great amount of care to build our models, and document our model and parameter choices, other humans don’t fully trust those models to stand on their own. I have a hard time believing that people would be more comfortable believing a black box model that could be skewed to the developer’s favour even more. On the other hand, my very senior manager did suggest we can replace our data collection efforts with AI and suggested that our stats/math heavy org might benefit from working with statisticians (we have several who are heavily involved in our work).

Many people talk about AI but how many have actually taken the time to understand the algorithms and how they work? Do people understand the difference between AI and classical statistics?

The Canadian government classifies regression models as AI, but they do distinguish between that and generative AI.

For me, AI is merely a collection of numerical recipes to estimate high dimensional functions. Canonical applications are in computer vision. Classical statistics is not terribly useful for these types of problems. The problem with AI is really about overfitting. If you have a lot of training data, it’s very easy to be fooled into thinking you’re onto something.
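
To make the overfitting point concrete, here is a minimal sketch, assuming purely synthetic data and a 1-nearest-neighbour "model" (both are my choices for illustration, not anything from the post above). The features and labels are independent noise, so there is nothing real to learn, yet the training score looks perfect.

```python
import random

def nearest_label(x, points, labels):
    """Return the label of the stored point closest to x (1-nearest-neighbour)."""
    best = min(
        range(len(points)),
        key=lambda i: sum((a - b) ** 2 for a, b in zip(x, points[i])),
    )
    return labels[best]

def accuracy(xs, ys, points, labels):
    """Fraction of (x, y) pairs whose nearest stored point carries label y."""
    hits = sum(nearest_label(x, points, labels) == y for x, y in zip(xs, ys))
    return hits / len(xs)

def noise_dataset(rng, n, dims):
    """Made-up data: random features, coin-flip labels, no relationship between them."""
    xs = [[rng.random() for _ in range(dims)] for _ in range(n)]
    ys = [rng.randint(0, 1) for _ in range(n)]
    return xs, ys

rng = random.Random(0)
train_x, train_y = noise_dataset(rng, 200, 20)
test_x, test_y = noise_dataset(rng, 200, 20)

# Scoring on the data the model memorised: every point is its own nearest neighbour.
train_acc = accuracy(train_x, train_y, train_x, train_y)
# Scoring on fresh data: the memorised noise does not transfer.
test_acc = accuracy(test_x, test_y, train_x, train_y)

print(f"train accuracy: {train_acc:.2f}")
print(f"test accuracy:  {test_acc:.2f}")
```

The training accuracy is perfect because the model only memorised the points, while the held-out accuracy should sit near coin-flip level. That gap is the "fooled into thinking you're onto something" effect, and it is why a held-out set matters.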

I’ve been dipping my toes into machine learning and multinomial logistic regression for classifying and aging fish ear bones (otoliths; similar to trees, they have rings which you can count to age). Using near infrared spectrometry you can get an idea of the chemical composition of the otolith and connect that to partial least squares regression or multinomial logistic regression to age them. From my understanding of the Canadian government’s stance, this is artificial intelligence. Instead of having a human counting the rings of a fish to determine its age, the age of the fish is now being determined by a computer interpreting the spectral signature it’s fed. Some people are using convolutional neural networks to do the same thing, but I don’t have enough data yet to try it myself.

Similarly, for otolith species ID, I feed the spectral signatures into a support vector machine learning algorithm or a partial least squares discriminant analysis and I can use that to figure out if an otolith is from a herring or a plaice. Again, this is a case of a computer making a determination that previously needed a human. As an interesting aside, I’ve found that these tools are better at determining “is this a herring otolith, yes or no?” than “here is an assortment of 50 otoliths, tell me what they are”. I guess it makes sense where you’re using 1 test vs many, but I don’t think many people realize this intuitively.
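
One possible reason for the yes/no vs "name them all" gap can be sketched with a toy example. Everything here is an assumption for illustration: the spectra are synthetic, "cod" is a hypothetical third species, and the classifier is a simple nearest-centroid rule rather than the SVM or PLS-DA described above. The yes/no answer is also derived by collapsing the multi-species prediction, not by training a separate binary model.

```python
import random

SPECIES = ["herring", "plaice", "cod"]  # "cod" is a hypothetical third class

def make_spectrum(rng, species, n_bands=10):
    """Synthetic 'spectrum': a species-specific offset plus Gaussian noise (made-up data)."""
    offset = SPECIES.index(species) * 0.5
    return [offset + rng.gauss(0, 1.0) for _ in range(n_bands)]

def centroid(rows):
    """Band-by-band mean of a list of spectra."""
    return [sum(col) / len(col) for col in zip(*rows)]

def predict(x, centroids):
    """Assign x to the species whose mean spectrum is closest."""
    return min(
        centroids,
        key=lambda s: sum((a - b) ** 2 for a, b in zip(x, centroids[s])),
    )

rng = random.Random(1)
train = {s: [make_spectrum(rng, s) for _ in range(50)] for s in SPECIES}
centroids = {s: centroid(rows) for s, rows in train.items()}

test = [(s, make_spectrum(rng, s)) for s in SPECIES for _ in range(100)]
preds = [(truth, predict(x, centroids)) for truth, x in test]

# "What species is this?": the prediction must match the exact species.
multi_acc = sum(t == p for t, p in preds) / len(preds)
# "Is this a herring, yes or no?": only the herring/not-herring split must match.
binary_acc = sum((t == "herring") == (p == "herring") for t, p in preds) / len(preds)

print(f"which species?      {multi_acc:.2f}")
print(f"herring, yes or no? {binary_acc:.2f}")
```

In this setup the yes/no score can never be lower than the which-species score: mistaking a plaice for a cod is an error on the multi-species question but still a correct "not a herring". Real SVM or PLS-DA models may behave differently, so treat this as intuition rather than an explanation of your results.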

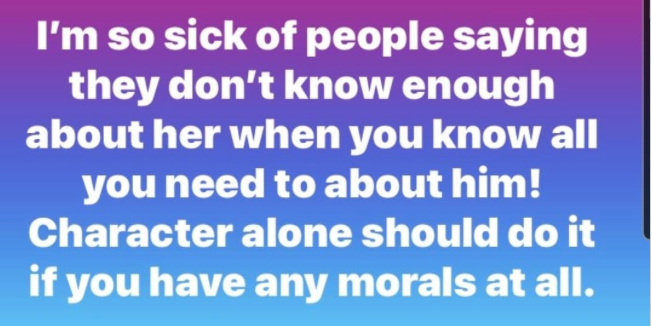

Here is a political truth: Trump asked for investigations of his foes while President. A regular Nixon, this guy.

Can someone freebie me this NYT article?

https://www.nytimes.com/2024/09/21/us/politics/trump-investigations-enemies.html

No, but related: Trump asked FCC to revoke ABC’s license over the debate. They said no, but what would the response have been had he been President? I would argue that the fact that he even asked for this should also be disqualifying, but whatever.