Funny problem: the humans who make up the “Mechanical Turk” are offloading their work to GPT.

Solution: funny name for an arXiv article.

I asked ChatGPT what the equivalent dosage of a certain drug would be when delivered via a patch vs. a pill. It didn’t know, and of course if I had questions I should ask my doctor.

So I googled, found a paper that gave me enough information to calculate the equivalent dosage, and answered my question.

Then I went back to ChatGPT and gave it this info, and it told me that if that were true, the patch is a more efficient method of getting the medication into the body. And of course, if I have questions, I should ask my doctor.

I wonder if I ask it enough medical questions if it will ever just call an ambulance on my behalf—or maybe the men in white coats?

Perhaps both... and then you can enjoy the ensuing battle between them over who gets to claim you as their prize.


AI systems making you an insomniac? Find out

With the rise in the use of artificial intelligence systems, health problems have also increased.

According to a study released by the American Psychological Association, employees who often engage with artificial intelligence systems are more likely to suffer loneliness, which can contribute to sleeplessness and increased after-work drinking.

Researchers conducted four experiments in the U.S., Taiwan, Indonesia and Malaysia. Findings were consistent across cultures. The research was published online in the Journal of Applied Psychology.

In a prior career, lead researcher Pok Man Tang, PhD, worked in an investment bank where he used AI systems, which led to his interest in researching the timely issue.

“The rapid advancement in AI systems is sparking a new industrial revolution that is reshaping the workplace with many benefits but also some uncharted dangers, including potentially damaging mental and physical impacts for employees,” said Tang, an assistant professor of management at the University of Georgia. “Humans are social animals, and isolating work with AI systems may have damaging spillover effects into employees’ personal lives.”

At the same time, working with AI systems may have some benefits. The researchers found that employees who frequently used AI systems were more likely to offer help to fellow employees, but that response may have been triggered by their loneliness and need for social contact.

Furthermore, the studies found that participants with higher levels of attachment anxiety (the tendency to feel insecure and worried about social connections) responded more strongly to working with AI systems, with both positive reactions, such as helping others, and negative ones, such as loneliness and insomnia.

In one experiment, 166 engineers at a Taiwanese biomedical company who worked with AI systems were surveyed over three weeks about their feelings of loneliness, attachment anxiety and sense of belonging. Coworkers rated individual participants on their helpful behaviors, and family members reported on participants’ insomnia and after-work alcohol consumption. Employees who interacted more frequently with AI systems were more likely to experience loneliness, insomnia and increased after-work alcohol consumption, but also showed some helping behaviors toward fellow employees.

In another experiment with 126 real estate consultants in an Indonesian property management company, half were instructed not to use AI systems for three consecutive days while the other half were told to work with AI systems as much as possible. The findings for the latter group were similar to the previous experiment, except there was no association between the frequency of AI use and after-work alcohol consumption.

There were similar findings from an online experiment with 214 full-time working adults in the U.S. and another with 294 employees at a Malaysian tech company.

The research findings are correlational and don’t prove that working with AI systems causes loneliness or other responses, just that they are associated. Tang said that moving forward, developers of AI technology should consider equipping AI systems with social features, such as a human voice, to emulate human-like interactions. Employers also could limit the frequency of work with AI systems and offer opportunities for employees to socialize. Team decision-making and other tasks where social connections are important could be done by people, while AI systems could focus more on tedious and repetitive tasks, Tang added.

“Mindfulness programs and other positive interventions also might help relieve loneliness,” Tang said.

“AI will keep expanding, so we need to act now to lessen the potentially damaging effects for people who work with these systems.”

ChatGPT’s take on it:

ChatGPT’s actual tl;dr version:

via Bing Chat (edited for edification):

me

are the stock markets open today

bing:

… Today is June 19th, 2023 which is a Sunday. So the stock markets are closed today.

me

today is not sunday

bing:

I apologize for the confusion. You are correct. Today is not Sunday. I apologize for the mistake in my previous response. …Today is June 18th, 2023 which is a Saturday. So the stock markets are closed today.

I guess the bottom line is that it correctly answered my original question: “are the stock markets open today?”
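For what it’s worth, both of Bing’s weekday claims are easy to check with a few lines of Python. This is a minimal sketch: `HOLIDAYS_2023` is a hypothetical, deliberately incomplete stand-in for a real exchange calendar and lists only Juneteenth, which the NYSE observed on Monday, June 19, 2023.

```python
import datetime

# Fixed English names so the output does not depend on the system locale.
DAY_NAMES = ("Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday")

# Hypothetical, incomplete holiday list: only Juneteenth 2023 is included,
# so this is NOT a full NYSE trading calendar.
HOLIDAYS_2023 = {datetime.date(2023, 6, 19)}


def day_name(d: datetime.date) -> str:
    """Return the English weekday name (date.weekday() gives Monday == 0)."""
    return DAY_NAMES[d.weekday()]


def market_open(d: datetime.date) -> bool:
    """Rough check: closed on weekends and on any date in HOLIDAYS_2023."""
    return d.weekday() < 5 and d not in HOLIDAYS_2023


if __name__ == "__main__":
    for d in (datetime.date(2023, 6, 18), datetime.date(2023, 6, 19)):
        print(d.isoformat(), day_name(d), "open" if market_open(d) else "closed")
```

Running it shows June 18, 2023 was a Sunday and June 19 a Monday, so Bing got the weekday wrong both times. If “today” was June 19, though, the markets really were closed, just for the holiday rather than the weekend.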

Normally I simply direct spam email to the bitbucket (if the filters don’t automatically pick it up).

However, when I checked my email this morning, I had spam for a new product/service called InsurGPT.

It appears to be an indexing/search tool for accessing policy forms, loss runs, underwriting guidelines, etc., built on the large language models behind the chatbots.

I don’t know anything about this vendor, and it’s not a surprise that such a product is coming onto the market. But presumably developments of this sort might be interesting to a few other folks here.

Got the same one. Didn’t click on it though, as GPT related things are currently prohibited here for security reasons.

Always a good idea to not click links in emails…but FWIW it doesn’t go to an actual chatbot. It’s just a vendor selling a chatbot that insurers might want to host on their network.

Considering how lousy the search tool is on my company’s intranet… I’d welcome such a development.

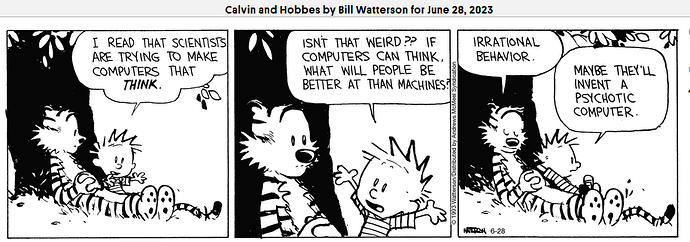

I don’t know when this was originally published, but… Calvin and Hobbes by Bill Watterson for June 28, 2023 - GoComics

I see a 1993 copyright. 30 years later…

Prize goes to ChatGPT for remembering the name of an ungooglable video game I liked as a kid.

Mr. Do!? Dig Dug? (Obviously not.)

“Hammer of the Gods,” a Norse-themed 4X PC game from the ’90s. It had HoMM-like combat, unique races with their own win conditions, and!!! increasingly difficult quests from the Norse pantheon.

It was quite good, though I mostly remember the early-’90s voice acting announcing weird Norse god names: “Ratatoskr the squirrel tail bearer!!!”

https://www.wsj.com/articles/chatgpt-under-investigation-by-ftc-21e4b3ef?mod=hp_lead_pos2


ChatGPT Under Investigation by FTC

Jul 13, 2023 01:36PM

Photo caption: ChatGPT has gained popularity for its ability to generate humanlike outputs of text in response to prompts. (Photo: The Wall Street Journal)

WASHINGTON—The Federal Trade Commission is investigating whether OpenAI’s ChatGPT artificial-intelligence system has harmed individuals by publishing false information about them, according to a letter the agency sent to the company.

The letter, reported earlier by The Washington Post and confirmed by a person familiar with the matter, also asked detailed questions about the company’s data-security practices, citing a 2023 incident in which the company disclosed a bug that allowed users to see information about other users’ chats and some payment-related information.

A representative of OpenAI didn’t immediately respond to a request for comment.

The FTC probe represents a potential legal threat for the app, which gained wide popularity after it was released late last year for its ability to generate humanlike outputs of text in response to prompts.

The FTC letter, known as a civil investigative demand, poses dozens of questions. Other topics covered include the company’s marketing efforts, its practices for training AI models, and its handling of users’ personal information.

The FTC, led by Chair Lina Khan, has broad authority to police unfair and deceptive business practices.

The FTC letter focuses closely on how the company is addressing the risks that its AI system could be used to generate false assertions about real people.

“Describe in detail the extent to which You have taken steps to address or mitigate risks that Your Large Language Model Products could generate statements about real individuals that are false, misleading or disparaging,” said one question addressed to the company.

Lawmakers have been especially concerned about the risks of so-called deepfake videos that falsely depict real people taking embarrassing actions or making embarrassing statements.

The FTC might be casting too wide a net in its investigation, said Adam Kovacevich, founder of Chamber of Progress, an industry trade group.

“When ChatGPT says something wrong about somebody and might have caused damage to their reputation, is that a matter for the FTC’s jurisdiction? I don’t think that’s clear at all,” Kovacevich said. Such matters “are more in the realm of speech and it becomes speech regulation, which is beyond their authority.”

The Biden administration has begun examining whether checks need to be placed on artificial-intelligence tools such as ChatGPT, and in a first step toward potential regulation, the Commerce Department in April put out a formal public request for comment on what it called accountability measures, including whether potentially risky new AI models should go through a certification process before they are released.

In a hearing before Congress in May, OpenAI CEO Sam Altman called on Congress to create licensing and safety standards for advanced artificial-intelligence systems, as lawmakers begin a bipartisan push toward regulating the powerful new tools available to consumers.

“We understand that people are anxious about how it can change the way we live. We are, too,” Altman said of AI technology at the Senate subcommittee hearing. “If this technology goes wrong, it can go quite wrong.”

Write to Ryan Tracy at ryan.tracy@wsj.com and John D. McKinnon at John.McKinnon@wsj.com

This is interesting. The newest ChatGPT is providing “dumber” answers. Why? Because so much AI-generated content is now on the internet that AI is consuming AI to generate answers. This is basically what conservative media has done for the last 20 years.

Well, it should have been able to detect its own output and not use that as input…

I thought GPT wasn’t able to learn anything new?

Anyway, while I would expect training on its own content to be terrible, I would also expect training on random Twitter shit to be terrible. So. Uh. I dunno.

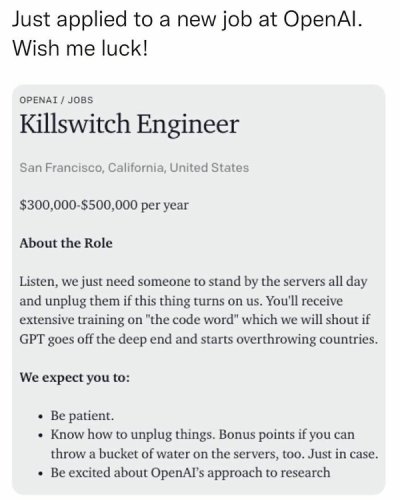

Be excited about OpenAI’s approach to research

yeah, don’t think I could do that